Introduction

In today's data-driven business landscape, the ability to quickly extract insights from complex datasets has become a competitive necessity. Large Language Models (LLMs) have emerged as powerful tools for interacting with data through natural language, promising to democratize data analysis across organizations. However, these systems come with a significant challenge: hallucinations — the tendency to generate plausible but factually incorrect information.

For professionals who rely on data accuracy to make critical decisions, AI hallucinations aren't just technical glitches; they represent serious business risks. Reports built on hallucinated figures can lead to misguided strategies, wasted resources, and damaged credibility.

Introducing Datova

Datova is an AI-powered data analyst that allows users to interact with their data using plain English questions. Unlike conventional AI assistants, Datova was specifically engineered to eliminate the risk of hallucinations when providing data insights. The result is a system that combines the accessibility of conversational AI with the trustworthiness of traditional data analysis tools.

The Challenge of AI Hallucinations in Data Analysis

When applied to data analysis, traditional LLM approaches present several critical vulnerabilities:

- Confabulation of Statistics: LLMs can confidently generate statistics and trends that look reasonable but have no basis in the actual data.
- Invented Data Points: Without proper constraints, AI systems might "fill in gaps" in datasets with invented values.
- Misremembered Context: Even when working with real data, LLMs can misremember or mix up information from different parts of a dataset.
- Overconfidence: Most concerning is that hallucinated responses are often delivered with the same level of confidence as factual ones, making it difficult for users to distinguish between reliable and unreliable information.

For professionals whose decisions impact business operations, customer relationships, or financial outcomes, these risks are unacceptable.

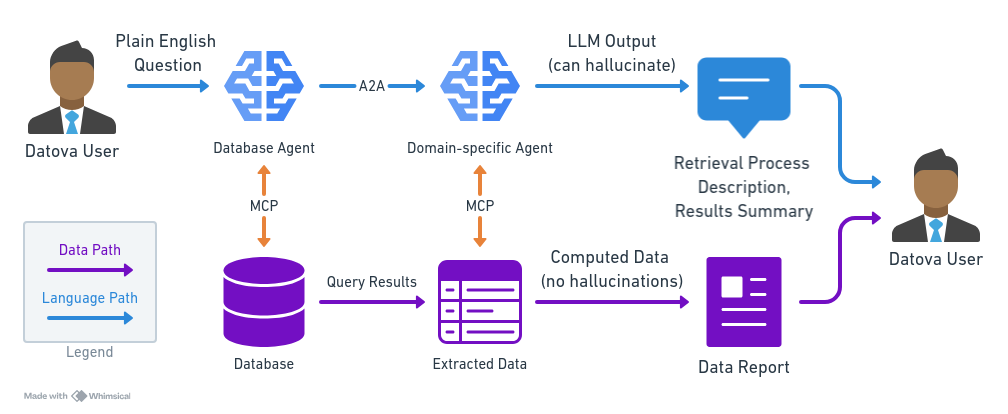

Datova's Solution: Separate Data Pathways

Datova tackles the hallucination problem through a fundamental architectural approach: separating the data pathway from the conversational AI pathway.

How It Works

- Dual Processing Architecture: When you ask Datova a question, your query is processed along two separate pathways:
  - The conversation pathway handles your natural-language interaction.
  - The data pathway directly accesses, processes, and verifies information from your datasets.
- Direct Data Access: Instead of "remembering" your data, Datova maintains a direct connection to your actual datasets, executing precise queries against your real data in real time.
- Truth-Anchored Responses: The system only provides information that can be directly linked to your actual data. If something cannot be directly verified, Datova will acknowledge the limitation rather than fabricate a response.
- Transparent Processing: Each insight is traceable back to its source data, giving you confidence in the accuracy of every response.
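To make the idea of separate pathways concrete, here is a minimal Python sketch of how a two-pathway design could work in general. It is not Datova's implementation: `translate_question`, `run_query`, `answer`, the `Answer` record, and the `sales` table are all hypothetical, and a lookup table stands in for the language model so the example runs without one. The property it illustrates is that the conversational component may only propose a SQL query; every figure in the reply comes from executing that query with Python's built-in `sqlite3` module, and the query text travels with the answer as its provenance.

```python
import sqlite3
from dataclasses import dataclass
from typing import Any, List, Optional, Tuple


@dataclass
class Answer:
    text: str                    # reply shown to the user
    sql: Optional[str]           # provenance: the exact query behind the figures
    rows: List[Tuple[Any, ...]]  # raw result rows the reply was built from


def translate_question(question: str) -> Optional[str]:
    """Conversation pathway (hypothetical stand-in for an LLM).

    It may only propose a query; it never emits figures of its own.
    A lookup table keeps the sketch runnable without a model.
    """
    known = {
        "total revenue by region":
            "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region",
    }
    return known.get(question.strip().lower())


def run_query(conn: sqlite3.Connection, sql: str) -> List[Tuple[Any, ...]]:
    """Data pathway: execute the query against the real dataset."""
    # Crude read-only guard for the sketch; a read-only connection is sturdier.
    if not sql.lstrip().upper().startswith("SELECT"):
        raise ValueError("only SELECT statements are allowed")
    return conn.execute(sql).fetchall()


def answer(conn: sqlite3.Connection, question: str) -> Answer:
    sql = translate_question(question)
    if sql is None:
        # Acknowledge the limitation instead of guessing.
        return Answer("I can't map that question to the available data.", None, [])
    try:
        rows = run_query(conn, sql)
    except (sqlite3.Error, ValueError) as exc:
        return Answer(f"The query could not be run: {exc}", sql, [])
    if not rows:
        return Answer("The data contains no matching records.", sql, [])
    # Every value in the reply is copied from `rows`; nothing is generated.
    text = "; ".join(", ".join(str(v) for v in row) for row in rows)
    return Answer(text, sql, rows)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO sales VALUES (?, ?)",
        [("East", 100.0), ("West", 250.0), ("East", 50.0)],
    )
    result = answer(conn, "Total revenue by region")
    print(result.text)  # East, 150.0; West, 250.0
    print(result.sql)   # the query that produced those figures
```

When the question cannot be mapped to a query, the query fails, or it returns no rows, the sketch replies with an explicit limitation rather than a guess, which mirrors the truth-anchored behavior described in the list.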

Unlike general-purpose AI assistants that prioritize conversational fluidity over accuracy, Datova's architecture prioritizes data integrity above all else.
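A complementary safeguard is to check a drafted natural-language reply against the query result before it is shown. The minimal Python sketch below flags every number in the draft that does not appear among the numeric values in the result rows. The helper names (`numbers_in`, `supported_values`, `unsupported_numbers`) are hypothetical and the number extraction is a deliberately naive regular expression; this illustrates the general idea of anchoring statements to data and is not a description of how Datova verifies its responses.

```python
import math
import re
from typing import Any, Iterable, List, Sequence

# Naive numeric-literal pattern: optional sign, thousands separators, decimals.
NUMBER = re.compile(r"-?\d+(?:,\d{3})*(?:\.\d+)?")


def numbers_in(text: str) -> List[float]:
    """Extract numeric literals such as 1,250 or -3.5 from free text."""
    return [float(m.replace(",", "")) for m in NUMBER.findall(text)]


def supported_values(rows: Iterable[Sequence[Any]]) -> List[float]:
    """Collect every numeric cell from the query result (booleans excluded)."""
    return [
        float(v)
        for row in rows
        for v in row
        if isinstance(v, (int, float)) and not isinstance(v, bool)
    ]


def unsupported_numbers(
    draft: str, rows: Iterable[Sequence[Any]], rel_tol: float = 1e-9
) -> List[float]:
    """Return the numbers in `draft` that match no value in `rows`."""
    allowed = supported_values(rows)
    return [
        n
        for n in numbers_in(draft)
        if not any(math.isclose(n, v, rel_tol=rel_tol) for v in allowed)
    ]


if __name__ == "__main__":
    rows = [("East", 150.0), ("West", 250.0)]
    print(unsupported_numbers("East brought in 150 and West 250.", rows))  # []
    print(unsupported_numbers("East brought in 150, up 12% on last year.", rows))  # [12.0]
```

The check is strict by design: a derived figure such as a growth percentage or a grand total is flagged unless the query itself computed it, and incidental numbers such as years are flagged too. That strictness trades more refusals for fewer unsupported figures; note that it says nothing about non-numeric claims in the text.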

Real-World Benefits

This architectural approach delivers several practical benefits for professionals who rely on data:

- Confidence in Decision-Making: Make business decisions based on verifiably accurate insights rather than plausible-sounding but potentially fabricated information.
- Democratized Data Access: Empower team members across departments to access data insights without specialized technical skills, and without fear of receiving misleading information.
- Time Efficiency: Get accurate answers in seconds instead of waiting for data specialists to run custom analyses or spending hours verifying AI-generated insights.
- Reduced Risk: Eliminate the danger of making costly decisions based on hallucinated data trends or statistics.
- Scalable Data Literacy: Build organizational data literacy by providing a safe environment for employees to explore data without the risk of being misled.

Use Cases

Datova's hallucination-free approach to data analysis makes it valuable across various professional contexts:

- Financial Analysis: Generate trustworthy financial forecasts and identify spending patterns without the risk of fabricated figures.
- Customer Insights: Analyze customer behavior trends with confidence that the patterns identified actually exist in your data.
- Operational Efficiency: Identify genuine bottlenecks and optimization opportunities based on real operational data.
- Market Research: Extract accurate competitive intelligence and market trends without invented statistics.
- Compliance Reporting: Generate reliable compliance reports with verifiable data lineage for auditing purposes.

Conclusion

As AI becomes more deeply embedded in professional workflows, the distinction between systems that might hallucinate and those architecturally designed to prevent hallucinations will matter more and more.

Datova represents a new approach to AI-powered data analysis—one that preserves the accessibility and efficiency of natural language interfaces while eliminating the risk of misleading outputs. For professionals whose success depends on making data-driven decisions, this balance of accessibility and trustworthiness isn't just convenient—it's essential.

By maintaining separate pathways for conversation and data processing, Datova ensures that every insight, statistic, and recommendation is firmly anchored in your actual data, giving you the confidence to act on AI-generated analysis without hesitation or doubt.